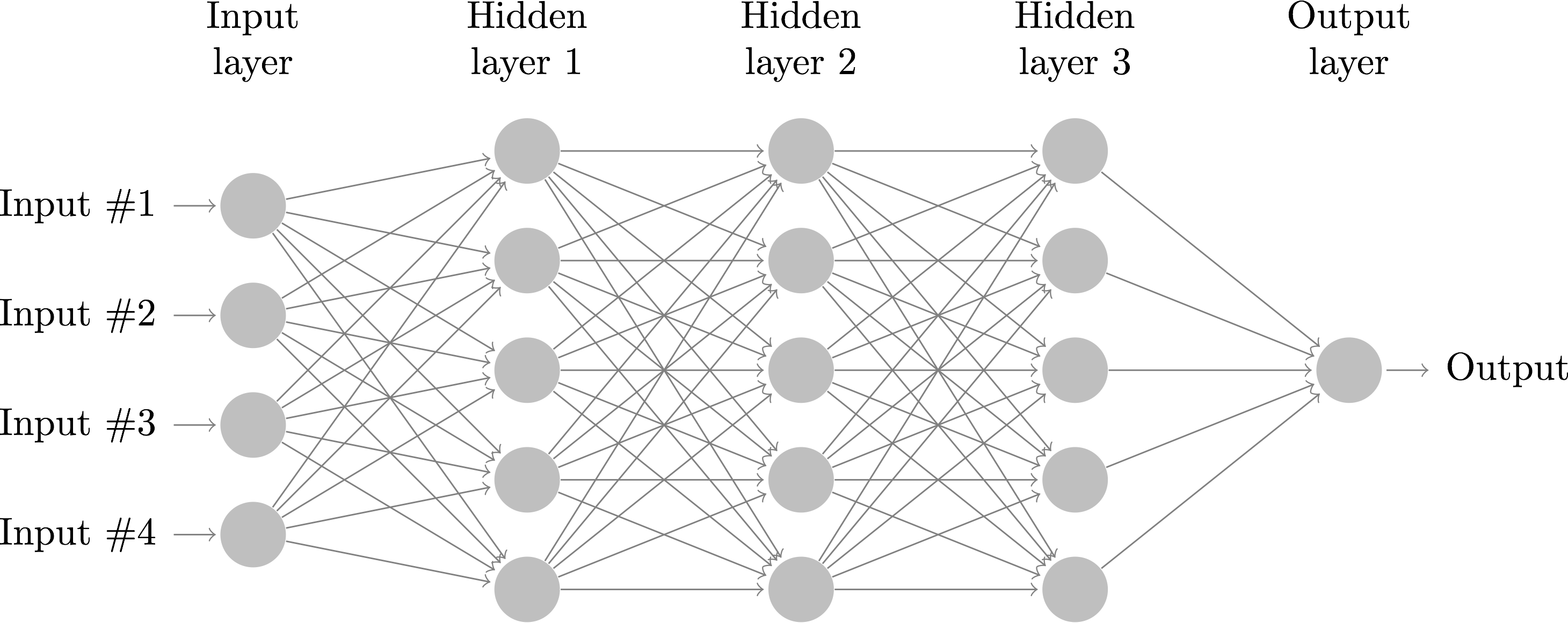

Your first task is to implement a small neural network with sigmoid activation functions, trained by backpropagation. The neural network is made of node objects, including SigmoidNode neurons for which you will implement several methods:

The first three functions store their results in the node's self.activation and self.delta fields. update_weights changes the weights of the edges stored in self.in_edges.
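As a rough illustration of the bookkeeping described above, here is a minimal standalone sketch of a sigmoid neuron. It is not the code from neural_net.py: the Edge and InputNode classes, the out_edges field, and the method names compute_activation, compute_output_delta, and compute_hidden_delta are all assumptions made for this example. Only update_weights, self.activation, self.delta, and self.in_edges come from the lab description, and the signatures in the lab's comments take precedence.

```python
import math


def sigmoid(x):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class Edge:
    """Hypothetical weighted connection from `source` to `dest`."""

    def __init__(self, source, dest, weight):
        self.source = source
        self.dest = dest
        self.weight = weight


class InputNode:
    """Hypothetical node whose activation is set directly."""

    def __init__(self, activation=0.0):
        self.activation = activation
        self.out_edges = []


class SketchSigmoidNode:
    """Illustrative neuron; method names are assumptions, not the lab's API."""

    def __init__(self):
        self.in_edges = []
        self.out_edges = []
        self.activation = 0.0
        self.delta = 0.0

    def compute_activation(self):
        # Weighted sum of upstream activations, squashed by the sigmoid.
        total = sum(e.weight * e.source.activation for e in self.in_edges)
        self.activation = sigmoid(total)

    def compute_output_delta(self, target):
        # Sigmoid derivative a * (1 - a) times the output error.
        a = self.activation
        self.delta = a * (1.0 - a) * (target - a)

    def compute_hidden_delta(self):
        # Sigmoid derivative times the weighted sum of downstream deltas.
        a = self.activation
        downstream = sum(e.weight * e.dest.delta for e in self.out_edges)
        self.delta = a * (1.0 - a) * downstream

    def update_weights(self, learning_rate):
        # Gradient step on each incoming edge, using the stored delta.
        for e in self.in_edges:
            e.weight += learning_rate * self.delta * e.source.activation
```

In a sketch like this, every delta in the network should be computed before any weight is updated, since the hidden-node delta reads the weights of its outgoing edges.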

The Network class is already set up to initialize a neural network with input, bias, and sigmoid nodes. You must implement the following functions for the neural network:

Much more detail on all of these functions can be found in the comments in neural_net.py. The main function sets up a network with a single two-neuron hidden layer and trains it to represent the function XOR.

The file nn_test.py implements unit tests for the various functions you will be implementing. It is strongly recommended that you test each function as you implement it, as debugging the entire training algorithm is an extremely difficult task.

For this part of the lab, you will be classifying images from the MNIST handwritten digit data set. Each input is a 28x28 array of integers between 0 and 255 representing grayscale images. The corresponding labels are digits 0–9.
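To make the data format concrete, the sketch below shows one common way to prepare inputs of this shape for a dense network: flatten each 28x28 image into a 784-element vector, scale the values into [0, 1], and one-hot encode the labels. The function names and the randomly generated stand-in images are assumptions for illustration; the starting point notebook may already perform equivalent steps in its own way.

```python
import numpy as np


def preprocess_images(images):
    """Flatten (n, 28, 28) integer images to (n, 784) floats in [0, 1]."""
    images = np.asarray(images, dtype=np.float32)
    return images.reshape(len(images), -1) / 255.0


def one_hot(labels, num_classes=10):
    """Turn digit labels 0-9 into rows with a single 1 in the label's column."""
    labels = np.asarray(labels, dtype=np.int64)
    encoded = np.zeros((len(labels), num_classes), dtype=np.float32)
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded


# Stand-in data with the same shape and value range as MNIST (not real digits).
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28))
fake_labels = np.array([3, 0, 9, 1, 3])

x = preprocess_images(fake_images)
y = one_hot(fake_labels)
print(x.shape, y.shape)  # (5, 784) (5, 10)
```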

We will be using a Jupyter notebook to demonstrate the Keras Python library (though you can of course create Keras neural networks in ordinary Python programs as well). To import Keras or run the Jupyter notebook, you first need to activate the virtual environment:

source /usr/swat/bin/CS63env

You can deactivate the virtual environment at any time with the command deactivate. To start up Jupyter, you should first navigate to your lab directory, then run:

jupyter notebook

The starting point notebook demonstrates setting up the inputs for training a neural network using the Keras library. The initial network is set up with parameters that should be familiar from lecture and part 1:

With these parameters, the network achieves only ~60% accuracy on the test set. Your task is to vary the network architecture and parameters to achieve 97% or better accuracy. To get started, try adding an additional hidden layer with ReLU activation. Here is a list of things you may want to try varying:

If trial and error doesn't get you to 97% accuracy, you should try searching for what others have done. Training neural networks on the MNIST data set is common enough that you should be able to find lots of relevant Google results.
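As one possible starting point for experimenting, the sketch below builds a dense network whose number and size of ReLU hidden layers are easy to change. It assumes a TensorFlow 2 style Keras installation (some environments use `import keras` instead), flattened 784-element inputs, and one-hot labels. The function name build_model, the layer sizes, the Adam optimizer, and the learning rate are illustrative choices, not the notebook's defaults, and they are not known to reach 97%.

```python
def build_model(hidden_units=(128, 64), learning_rate=0.001):
    """Dense classifier for flattened 28x28 images (illustrative sketch)."""
    # Imported lazily so the definition loads even where Keras is unavailable.
    from tensorflow import keras

    model = keras.Sequential()
    model.add(keras.Input(shape=(784,)))
    # One ReLU hidden layer per entry in hidden_units.
    for units in hidden_units:
        model.add(keras.layers.Dense(units, activation="relu"))
    # Softmax output: one probability per digit class.
    model.add(keras.layers.Dense(10, activation="softmax"))
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

A model built this way can be trained with model.fit(x_train, y_train, epochs=..., batch_size=...) and scored with model.evaluate(x_test, y_test), where the array names stand for whatever the notebook calls its training and test data.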

You have been provided with a LaTeX document called nn.tex, where you have two sections to complete. In the first section, you should fill in the weights found by your neural network from part 1, using a non-zero random_seed of your choosing (for which learning converges). You should then explain the weights that the neural network learned and how the network is computing XOR. In particular, you should be able to recognize a specific Boolean function (a.k.a. logic gate) corresponding to each hidden/output node.
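To see what it means for a sigmoid node to correspond to a logic gate, the following sketch evaluates a single two-input sigmoid unit on all four Boolean inputs and rounds the result. The weights and biases here are hand-picked for illustration, not learned; the weights your network finds will differ in scale and possibly in sign, and may correspond to different gates than the two shown.

```python
import math
from itertools import product


def sigmoid(x):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def unit(weights, bias, inputs):
    """Output of one sigmoid neuron with the given weights and bias."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, inputs)))


# Hand-picked (weights, bias) pairs; large magnitudes push outputs near 0 or 1.
GATES = {
    "AND": ((10.0, 10.0), -15.0),  # fires only when both inputs are 1
    "OR": ((10.0, 10.0), -5.0),    # fires when at least one input is 1
}


def truth_table(name):
    """Rounded outputs for inputs (0,0), (0,1), (1,0), (1,1), in that order."""
    weights, bias = GATES[name]
    return [round(unit(weights, bias, bits)) for bits in product((0, 1), repeat=2)]


print(truth_table("AND"))  # [0, 0, 0, 1]
print(truth_table("OR"))   # [0, 1, 1, 1]
```

Applying the same rounding to each node of your trained network, one node at a time, is one way to read off which gate it approximates.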

In the second section, you will describe the parameters you found that achieved over 97% accuracy on the MNIST handwritten digit classification task. You should describe how you came up with them or cite where you found them. Then you should explain what features of the parameters you found make them more successful than the defaults that we started with. Be sure that your descriptions are sufficiently detailed that the reader can re-create your network and reproduce your results.

You can edit the nn.tex file with any text editor, such as gvim. There's lots of great help available online for LaTeX; just google "latex topic" to find lots of tutorials and Stack Exchange posts. To compile your LaTeX document into a pdf, run the following command:

pdflatex nn.tex

You can then open the pdf from the command line with the command xdg-open, which has the same effect as double-clicking an icon in the file browser. Feel free to use services like sharelatex to edit your LaTeX file.