Week 10: Sorting Algorithms

Week 10 Goals

- Sorting Algorithms
- Complexity Analysis of algorithms

Week 10 Files

- sorting.py: the start of a program to implement and time some sorting algorithms
- time_binary_search.py, time_linear_search.py: two programs that time the binary and linear search algorithms

Sorting

Like searching, sorting is a fundamental operation on data. And as we saw last week, when a set of data are in sorted order, there are often faster algorithms we can use for searching.
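As a reminder of why sorted data helps, here is a minimal sketch that counts the comparisons made by linear search and by binary search on the same sorted list. The function names `linear_search_steps` and `binary_search_steps` are made up for this illustration; they are not taken from the Week 10 files.

```python
def linear_search_steps(items, target):
    """Count the comparisons linear search makes before it finds target or runs out of items."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the comparisons binary search makes; items must already be sorted."""
    low, high = 0, len(items) - 1
    steps = 0
    while low <= high:
        mid = (low + high) // 2
        steps += 1
        if items[mid] == target:
            break
        elif items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return steps


data = list(range(100_000))  # already in sorted order
print("linear search steps:", linear_search_steps(data, 99_999))
print("binary search steps:", binary_search_steps(data, 99_999))
```

Searching for the last item forces linear search to look at every element, while binary search halves the remaining range at each step, so its count grows roughly with log N.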

Many applications use sorting and searching, and choosing the right algorithm (or developing a new, better one) can have a huge impact on a program’s performance. In fact, since the mid-1980s there has been an annual sorting competition (sortbenchmark.org) among CS researchers to see who can design the best sorting algorithm, or a better implementation of an existing one, as measured by several metrics (fastest, most energy efficient, cheapest, …). The sorting competition was started by Jim Gray, who did foundational work in database systems that led to his receiving the 1998 Turing Award (the Turing Award is like the Nobel Prize of CS). Databases are often used to store large data sets, and data access efficiency is an important area of database systems research.

We are going to develop some sorting algorithms this week (probably not award-winning ones) and analyze their performance using Big-O analysis.

Comparing Algorithms

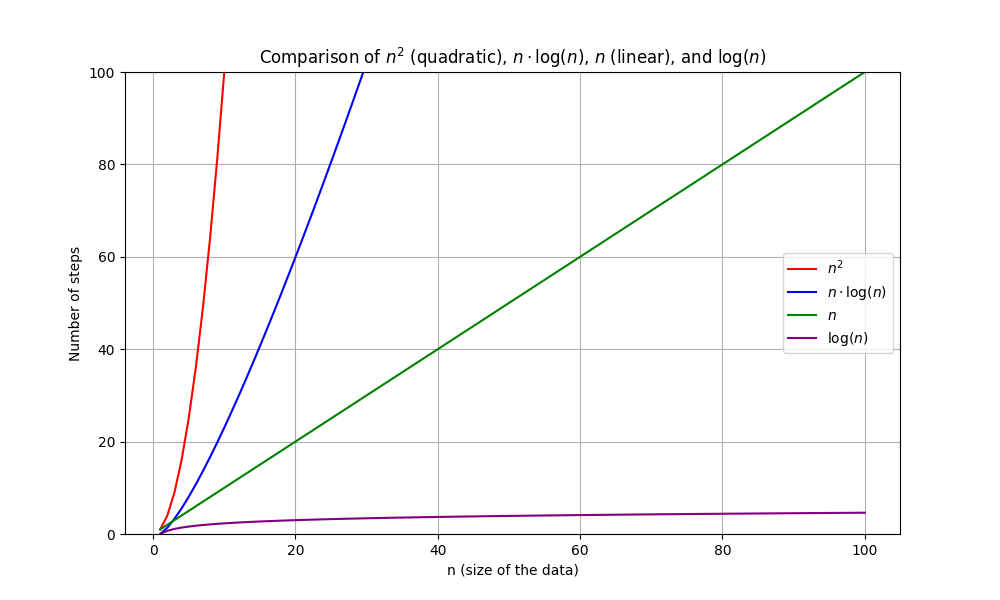

When we compare algorithms by the functions that describe how many steps they take for a problem of size N, it is often helpful to view a graph of these functions to get a sense of how each grows with N. The graph here shows logarithmic (log N), linear (N), linearithmic (N log N), and quadratic (N²) functions.
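To see the same growth rates as numbers rather than curves, the following sketch tabulates each function for a few problem sizes. The helper name `growth_table` and the chosen sizes are assumptions made for this example, and logarithms are taken base 2.

```python
import math


def growth_table(sizes):
    """Return one row (N, log2 N, N * log2 N, N^2) for each problem size N."""
    rows = []
    for n in sizes:
        log_n = math.log2(n)
        rows.append((n, log_n, n * log_n, n * n))
    return rows


# Print a small table: each column is one of the functions being compared.
print(f"{'N':>8} {'log N':>8} {'N log N':>12} {'N^2':>12}")
for n, log_n, n_log_n, n_squared in growth_table([10, 100, 1000, 10000]):
    print(f"{n:>8} {log_n:>8.1f} {n_log_n:>12.1f} {n_squared:>12}")
```

Each time N grows by a factor of 10, N² grows by a factor of 100, while log N increases only by a small constant amount. That gap is why the choice of algorithm matters more and more as the data set gets larger.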